As cyber threats continue to grow in sophistication and frequency, security teams are under immense pressure to detect and respond to incidents quickly and effectively. With the volume of alerts generated every day, analysts can easily become overwhelmed and fatigued. This is where automation and artificial intelligence (AI) can help.

Unless you’re living under a rock, you’ve probably heard of ChatGPT, a large language model trained by OpenAI. You’ve likely also heard about Smart SOAR, our security orchestration, automation, and response (SOAR) platform. By combining the natural language processing capabilities of ChatGPT with the automation and orchestration features of Smart SOAR, security teams can automatically receive valuable context and insights into security incidents, reducing the burden on analysts and improving response times.

In this blog, we’ll explore the benefits of integrating ChatGPT with Smart SOAR and outline how it can be used in a malware investigation to provide context on the attack, recommend next steps, and write a summary from the investigator’s point of view.

AI-Assisted Kill Chain Investigations

Understanding the origin of an attack, the attacker’s motivations, and where an attacker is likely to be seen next can establish context for an investigation and inform your incident response efforts. Doing this at scale with automation can prevent analyst burnout and streamline investigations.

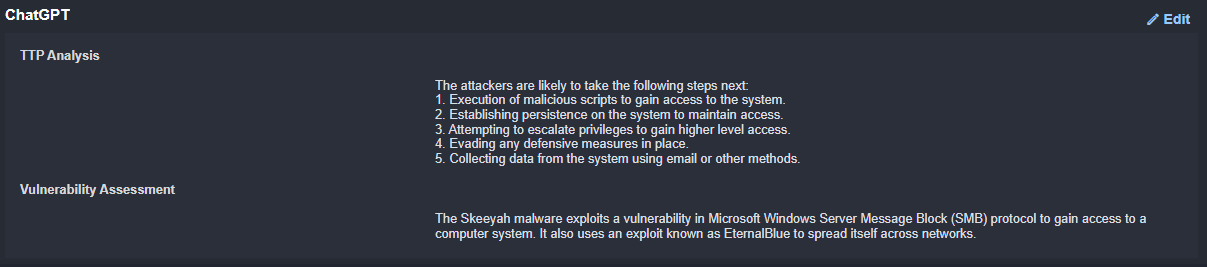

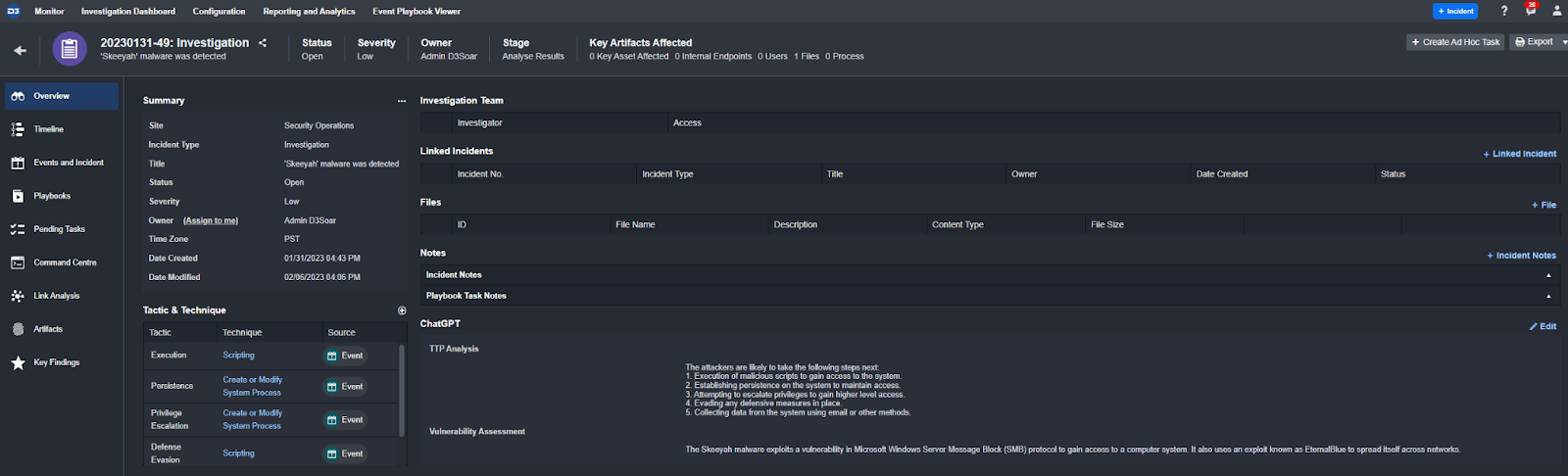

In this example, we’ll use the MITRE TTPs and malware family found in a Microsoft Defender for Endpoint alert to gather contextual information on the incident. Specifically, we’ll ask ChatGPT what next steps the attacker is likely to take and what vulnerability the malware likely exploited, based on what is known about the TTPs and malware.
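As a rough illustration of the two questions described above, the sketch below turns alert fields into prompt text. The dictionary keys, the sample tactics and techniques, and the function names are all assumptions made for this example; they are not the Microsoft Defender for Endpoint schema or anything Smart SOAR exposes.

```python
# Hypothetical alert fields; real field names depend on your alert source.
alert = {
    "malware_family": "Skeeyah",
    "tactics": ["Execution", "Persistence"],
    "techniques": ["T1059", "T1547"],
}


def next_steps_question(alert: dict) -> str:
    """Build the question about the attacker's likely next steps."""
    tactics = ", ".join(alert["tactics"])
    techniques = ", ".join(alert["techniques"])
    return (
        f"An alert reported the MITRE ATT&CK tactics {tactics} "
        f"and techniques {techniques}. "
        "What steps is the attacker likely to take next?"
    )


def vulnerability_question(alert: dict) -> str:
    """Build the question about the vulnerability the malware likely exploited."""
    techniques = ", ".join(alert["techniques"])
    return (
        f"The {alert['malware_family']} malware was detected using "
        f"techniques {techniques}. "
        "What vulnerability did the malware likely exploit?"
    )


for question in (next_steps_question(alert), vulnerability_question(alert)):
    print(question)
```

Keeping each question in its own function mirrors the two separate queries in the workflow, so either one can be reworded without touching the other.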

The results are then automatically collected and displayed to the investigation team in the incident overview:

Response 1:

The attackers are likely to take the following steps next:

- Execution of malicious scripts to gain access to the system.

- Establishing persistence on the system to maintain access.

- Attempting to escalate privileges to gain higher level access.

- Evading any defensive measures in place.

- Collecting data from the system using email or other methods.

Response 2:

The Skeeyah malware exploits a vulnerability in Microsoft Windows Server Message Block (SMB) protocol to gain access to a computer system. It also uses an exploit known as EternalBlue to spread itself across networks.

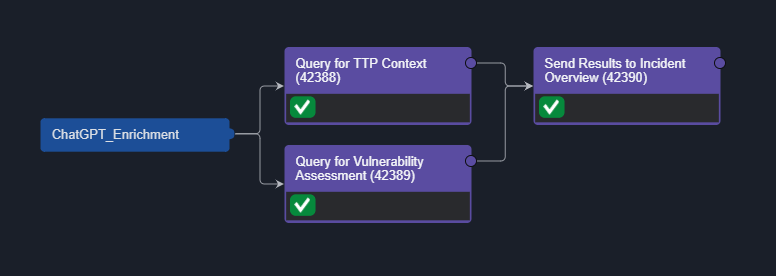

The workflow that executed the queries and displayed the response is simple. Read on for a step-by-step guide to implementing this yourself.

Step 1: Obtain an API Key From OpenAI

Log in to OpenAI and go to ‘API Keys’ under ‘User Settings’.

Generate a new key and copy it for later.

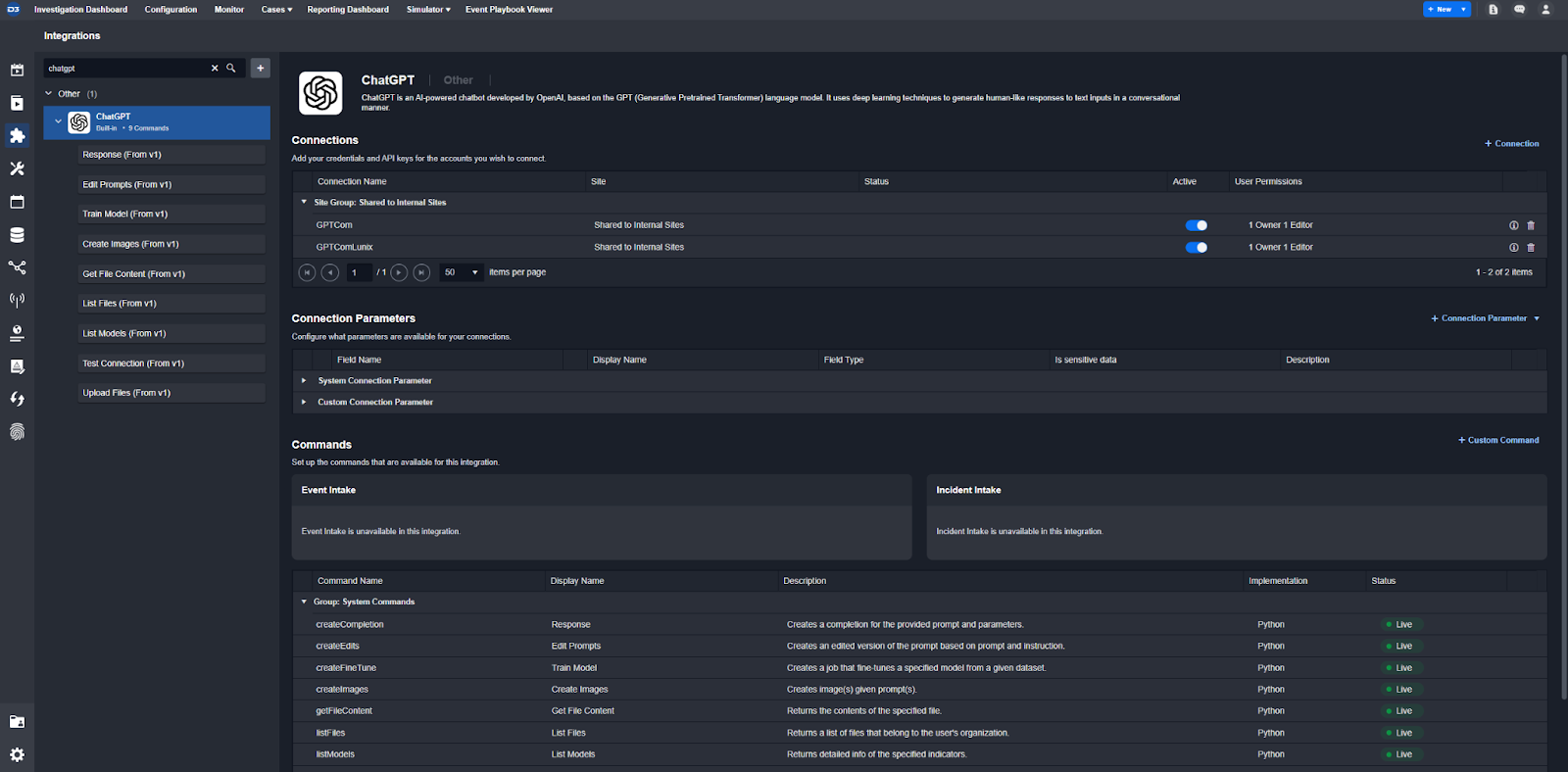

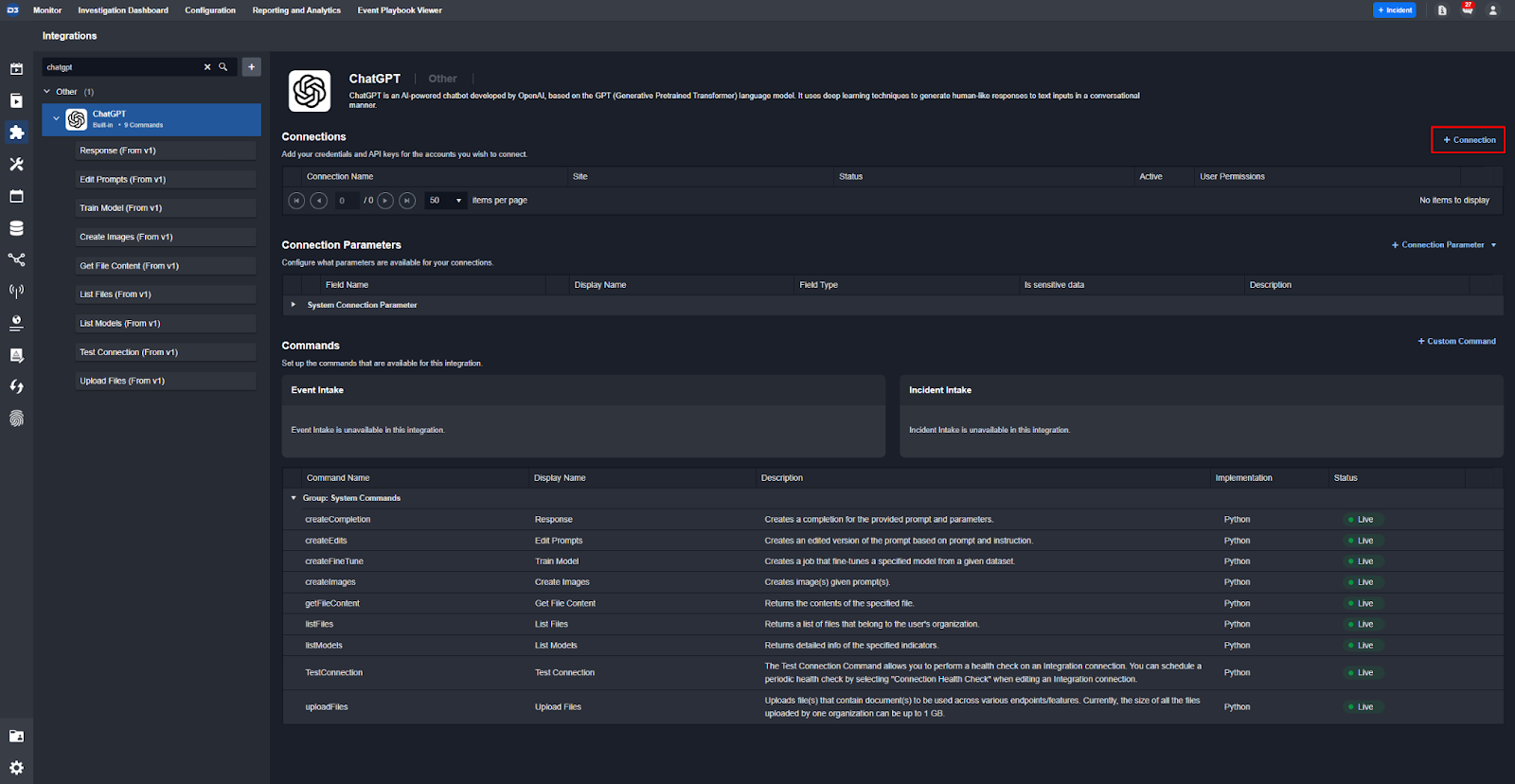

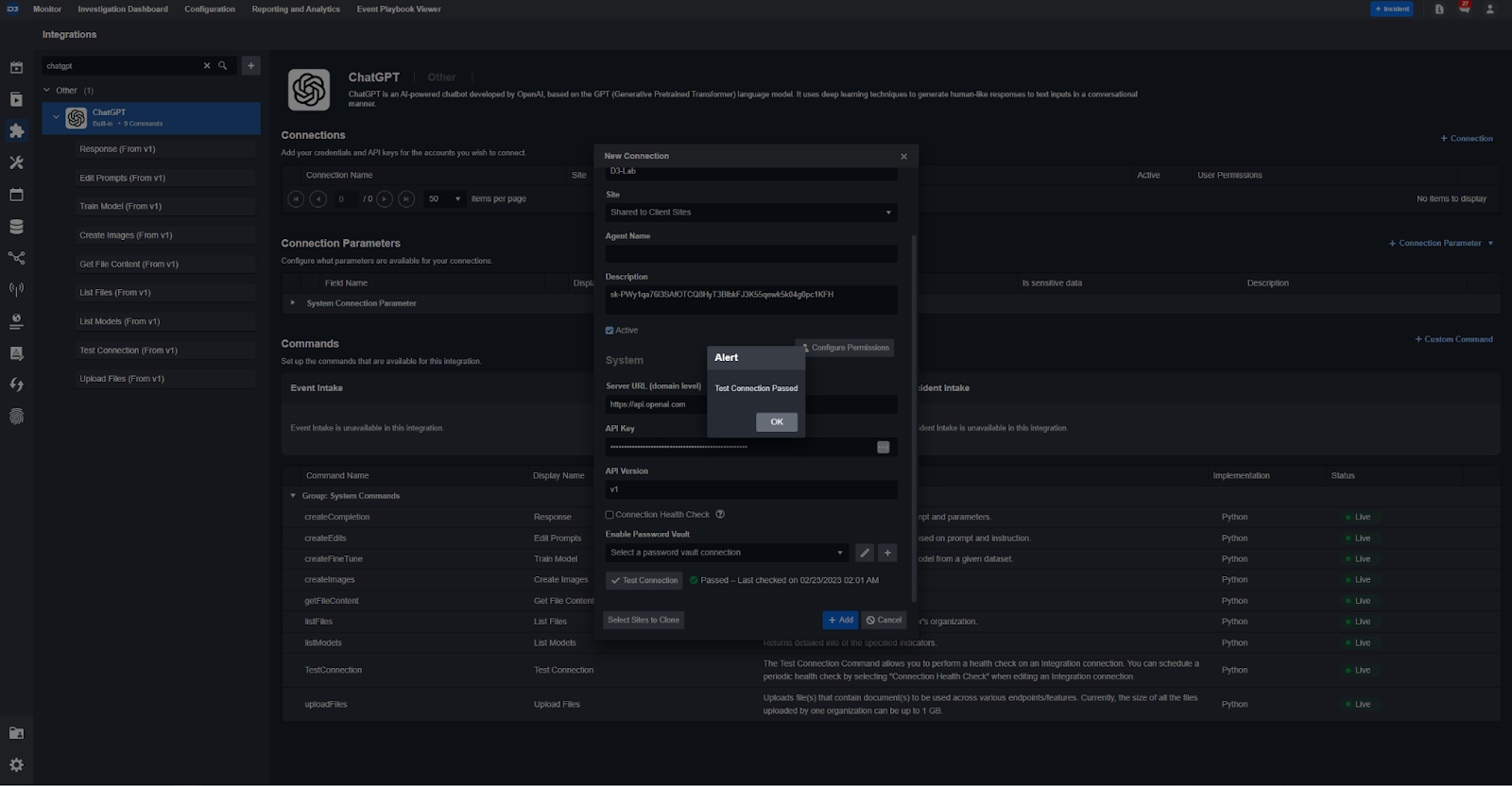
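If you want to sanity-check the key outside Smart SOAR, a request along the following lines should work. This is a minimal sketch using only the Python standard library against OpenAI's chat completions endpoint. The model name is an assumption, and this is not how the D3 integration itself is implemented.

```python
import json
import urllib.request

OPENAI_CHAT_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(api_key: str, question: str,
                       model: str = "gpt-3.5-turbo") -> urllib.request.Request:
    """Build (but do not send) a chat completions request.

    The default model name is an assumption; use one your account can access.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": question}],
    }
    return urllib.request.Request(
        OPENAI_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(api_key: str, question: str) -> str:
    """Send the request and return the first reply (needs network access)."""
    request = build_chat_request(api_key, question)
    with urllib.request.urlopen(request, timeout=30) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


# Building the request involves no network traffic, so it is safe to inspect.
example = build_chat_request("sk-placeholder", "Reply with the word ok.")
print(example.get_method(), example.full_url)
```

Calling `ask()` with your real key, ideally read from an environment variable rather than pasted into a script, confirms that the key works before you add it to the connection.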

Step 2: Set Up the Connection in D3

Navigate to Configurations > Integrations > ChatGPT, then:

- Select ‘+ Connection’.

- Give it a name and add your API key.

- Select ‘Test Connection’ to make sure everything works.

- Hit Save and it’ll be ready to use.

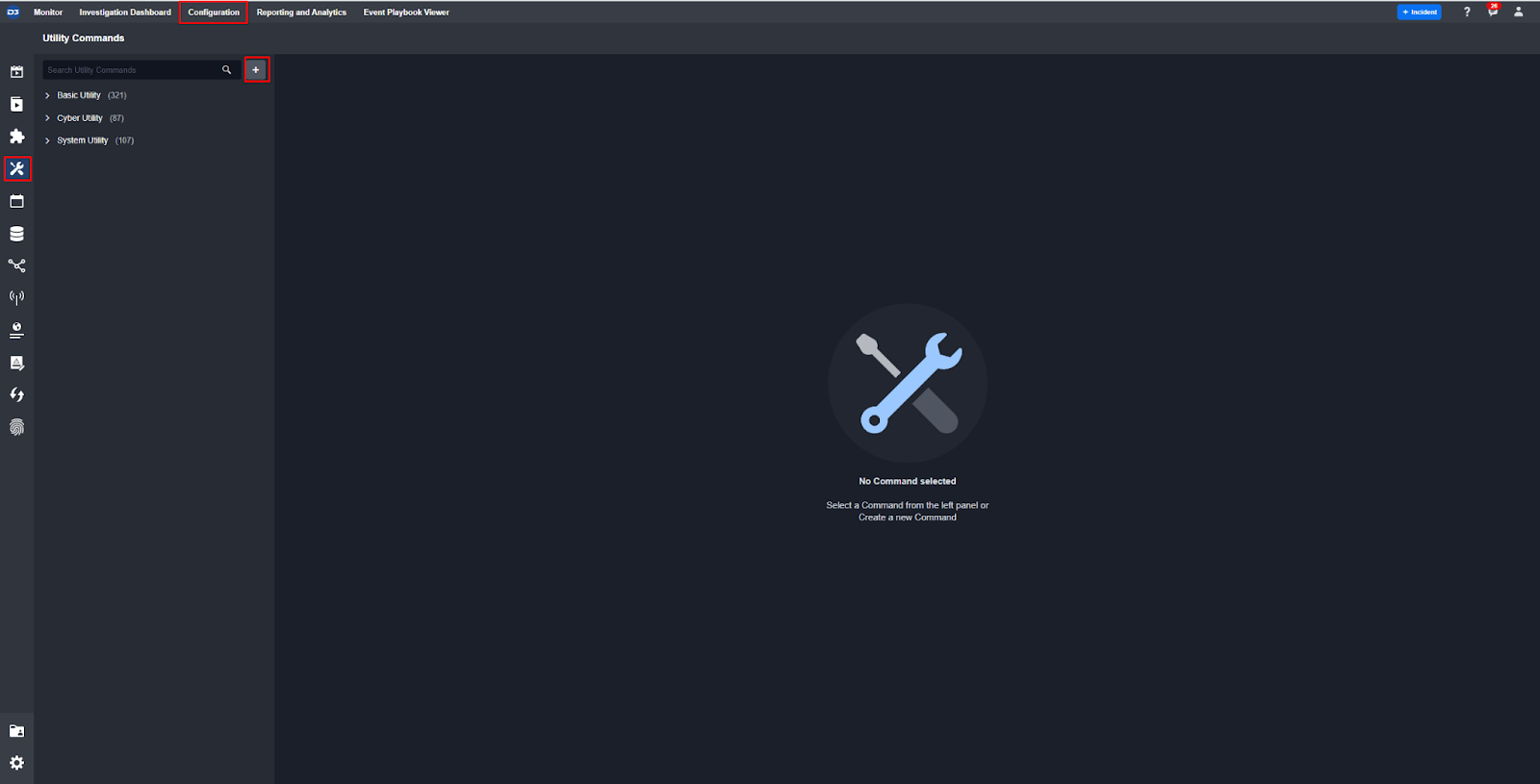

Step 3: Build the Playbook

Now that the connection is ready, go to Configurations > Utility Commands and hit the ‘+’ button. Name it anything you like and select ‘Codeless Playbook’ as the implementation type:

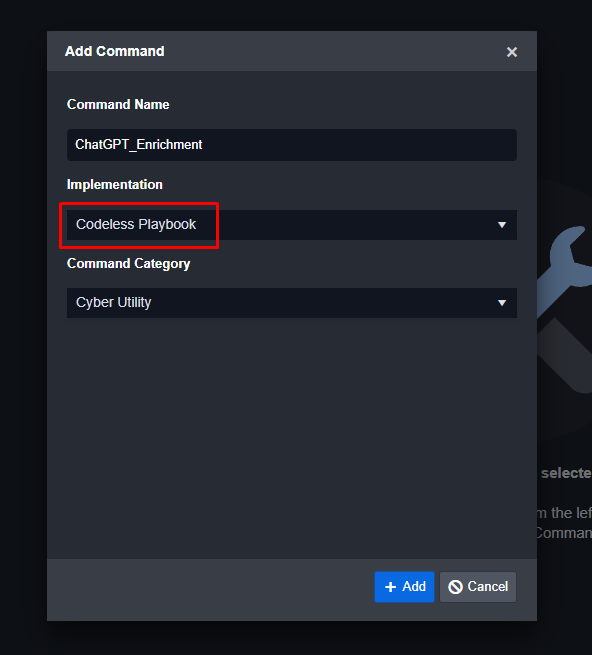

Select ‘Command Task’ and ‘Ad-Hoc’, then navigate to ‘New Task’ next to ‘Overview’.

Search for the command you built under Integration Commands, then drag and drop it into the playbook editor:

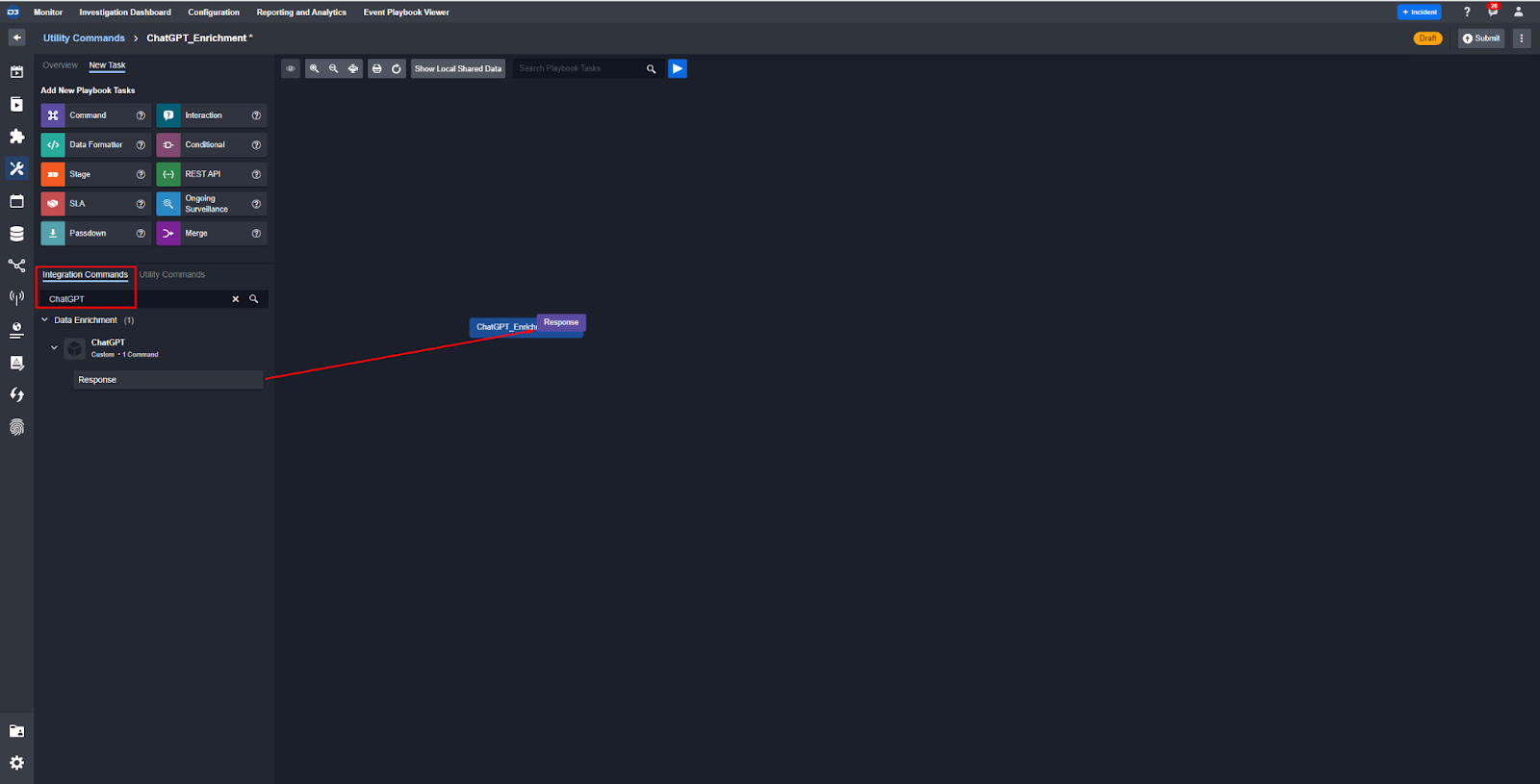

Add another one for the vulnerability request and then a task to push the results to the incident overview:

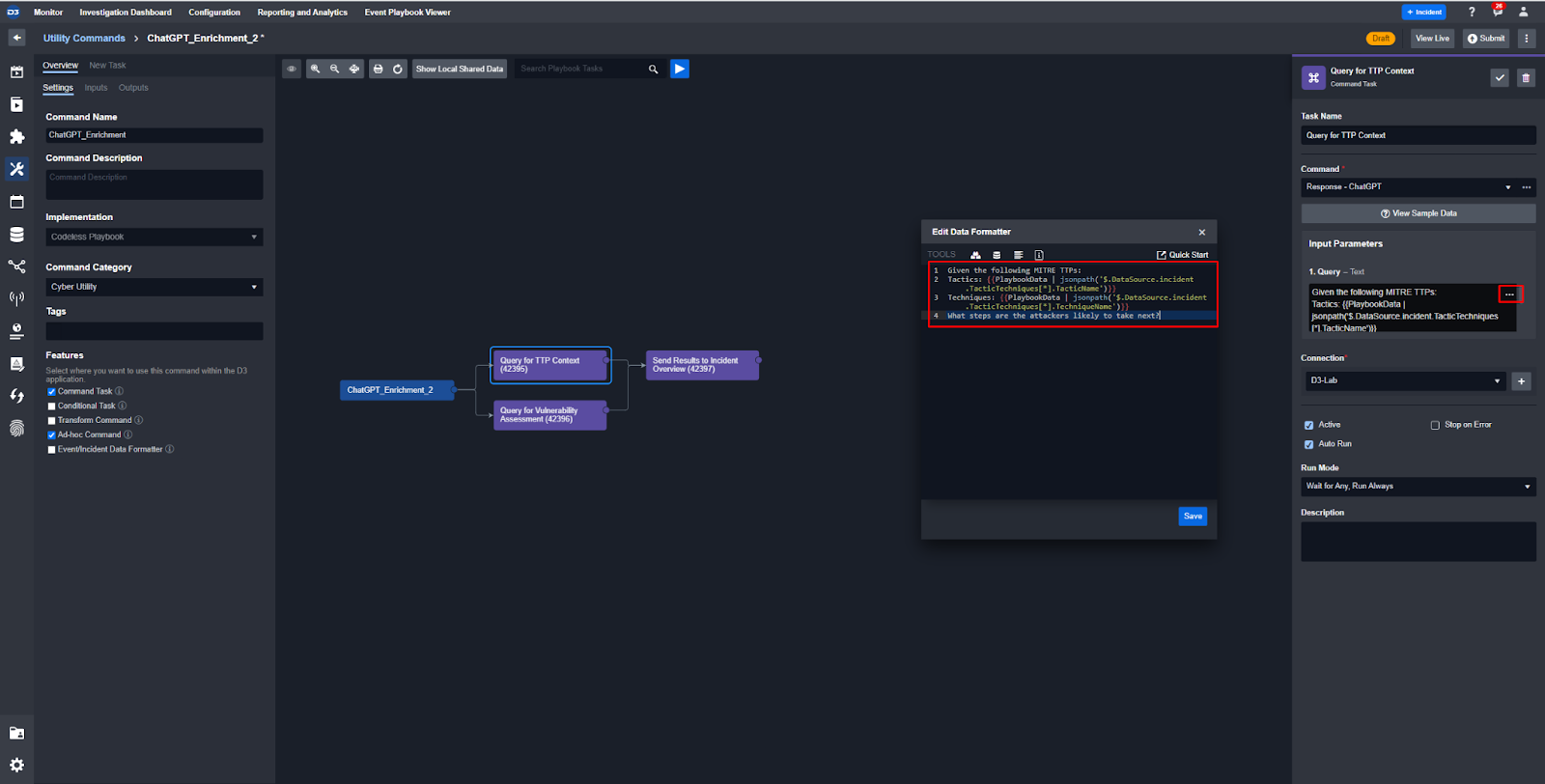

In the Query parameter for each task, you can add dynamic variables to make each search relevant to the current incident. Here you can see that the Tactics and Techniques are dynamically populated:

Submit the playbook when you’re done and it will be available for use right away.

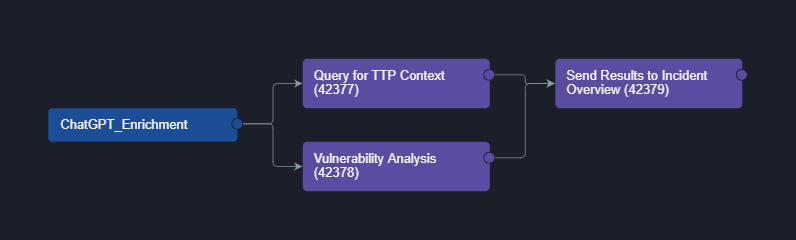
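Conceptually, the dynamic variables in the Query parameter behave like placeholders in a template that are filled in per incident. The sketch below imitates that idea with Python's `string.Template`. The placeholder names and query wording are assumptions, and Smart SOAR's own variable syntax is not reproduced here.

```python
from string import Template

# Hypothetical query text; $tactics and $techniques stand in for the
# dynamic variables selected in the playbook editor.
QUERY = Template(
    "The alert lists the MITRE tactics $tactics and techniques $techniques. "
    "What steps is the attacker likely to take next?"
)


def render_query(incident_fields: dict) -> str:
    """Fill the placeholders with values from one incident."""
    values = {name: ", ".join(items) for name, items in incident_fields.items()}
    # substitute() raises KeyError when a placeholder has no value, which
    # avoids silently sending a half-filled question.
    return QUERY.substitute(values)


print(render_query({
    "tactics": ["Execution", "Persistence"],
    "techniques": ["T1059", "T1547"],
}))
```

Failing loudly on a missing field is a deliberate choice in this sketch: a question with an empty placeholder would still get an answer, just not one about the incident at hand.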

Step 4: Implement the Playbook

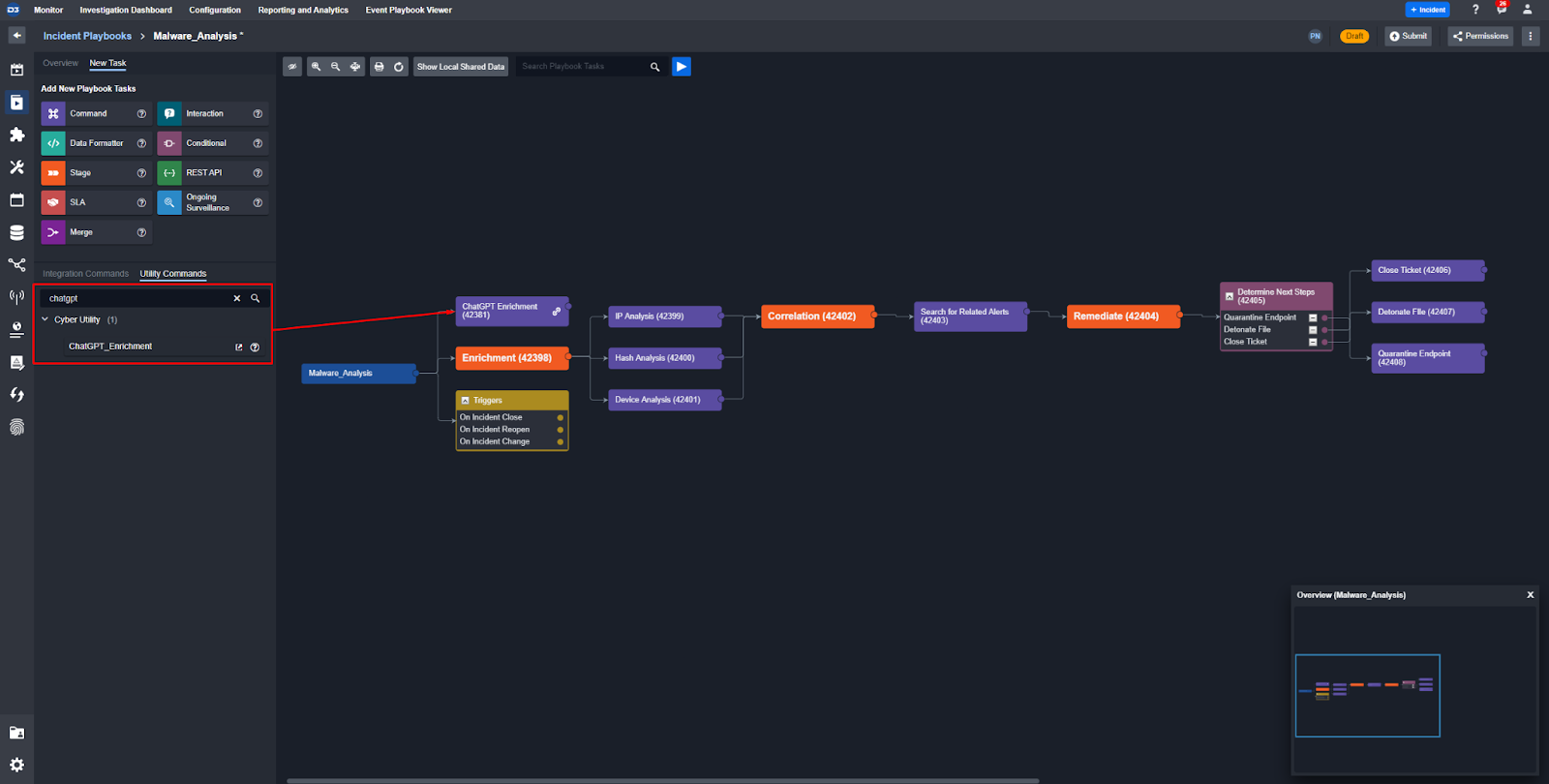

Choose an existing playbook and search for ChatGPT_Enrichment in the Utility Command tab. Then drag and drop it into the playbook builder. Here we’ve added it to an existing malware investigation playbook:

Since we built the workflow as a utility command, we can drag and drop it into any playbook without having to rebuild it. This modular playbook design is one of the unique capabilities of Smart SOAR.

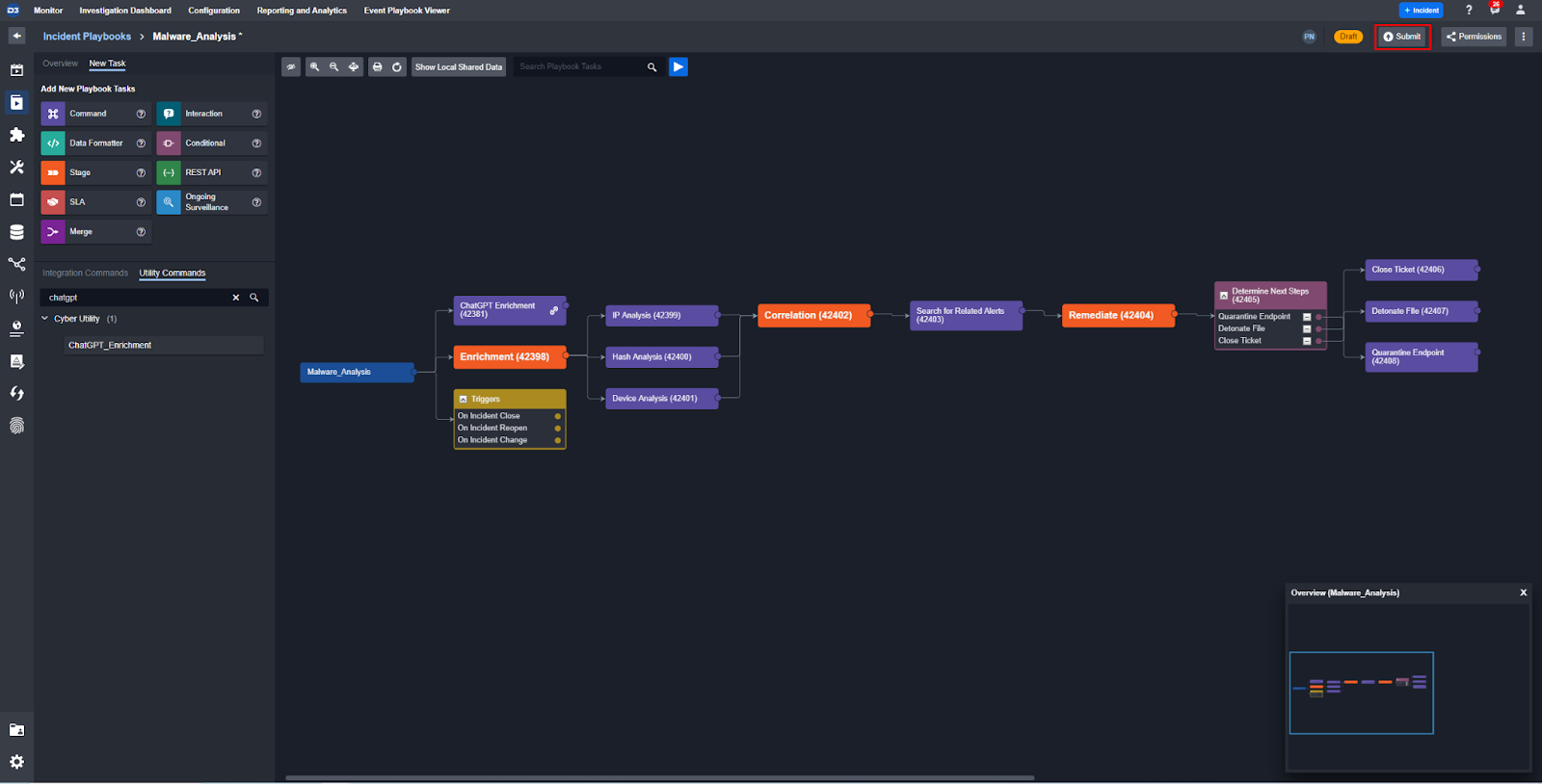

Click ‘Submit’ in the top-right and the playbook will be good to go.

In the incident, you will see the results from ChatGPT populated into the Incident Overview.

Additionally, your team can run the command ad-hoc if they want to query the API at any point in the investigation.

A disclaimer for those using ChatGPT for SecOps: ChatGPT’s suggestions and analysis are often accurate, but it can also generate outputs that are completely wrong. This phenomenon, which affects all large language models (LLMs), is referred to as “hallucination”. Keep it in mind when relying on ChatGPT to make important decisions. That said, new updates to these LLMs keep reducing the error rate. Also, be careful about what data you send to ChatGPT when handling sensitive investigations.
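One way to act on the data-handling caution is to mask obvious identifiers before a query leaves your environment. The following is a minimal sketch, assuming that IPv4 addresses and email addresses are the values you want to hide. The patterns are illustrative rather than exhaustive, and the function is not part of Smart SOAR.

```python
import re

# Simple patterns for illustration; they will not catch every sensitive value.
EMAIL = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")
IPV4 = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def redact(text: str) -> str:
    """Replace email addresses and IPv4 addresses with neutral tokens."""
    text = EMAIL.sub("[EMAIL]", text)
    return IPV4.sub("[IP]", text)


print(redact("User jdoe@example.com on host 10.1.2.3 ran a script."))
# prints: User [EMAIL] on host [IP] ran a script.
```

Hostnames, usernames, and file paths may be just as sensitive in your environment, so treat this as a starting point.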