There’s a particular kind of conference attendee who makes SOC vendors nervous. They walk up to the booth, lean in, and say: “I want to fully automate everything. No human in the loop.”

That’s when you know they aren’t really a serious prospect.

Full automation sounds appealing. Who wouldn’t want AI handling 100% of their alerts while the team focuses on strategic work? But here’s the reality check that keeps CISOs up at night: you don’t want to automate everything in cybersecurity. AI is a force multiplier, but it needs to be pointed in the right direction.

The question isn’t whether to automate. It’s what to automate, what to augment, and what to keep firmly in human hands.

AI in the SOC promises faster triage, better coverage, less burnout. But fully automating security operations creates its own risks: misclassifications, hallucinations, actions taken without context.

D3 Security’s recent webinar brought together industry experts Philip Beck (Director of Sales Engineering), Chaitanya Pentapati (Senior Sales Engineer), and Amy Tom (Community Manager) to tackle this exact tension: striking the right balance between automation, transparency (“Glassbox AI”), and human oversight.

They laid out D3’s framework for implementing AI in the SOC: what to automate, what to gate behind human approval, and how to maintain visibility into AI decision-making. They also explained why the “automate everything” crowd might want to pump the brakes.

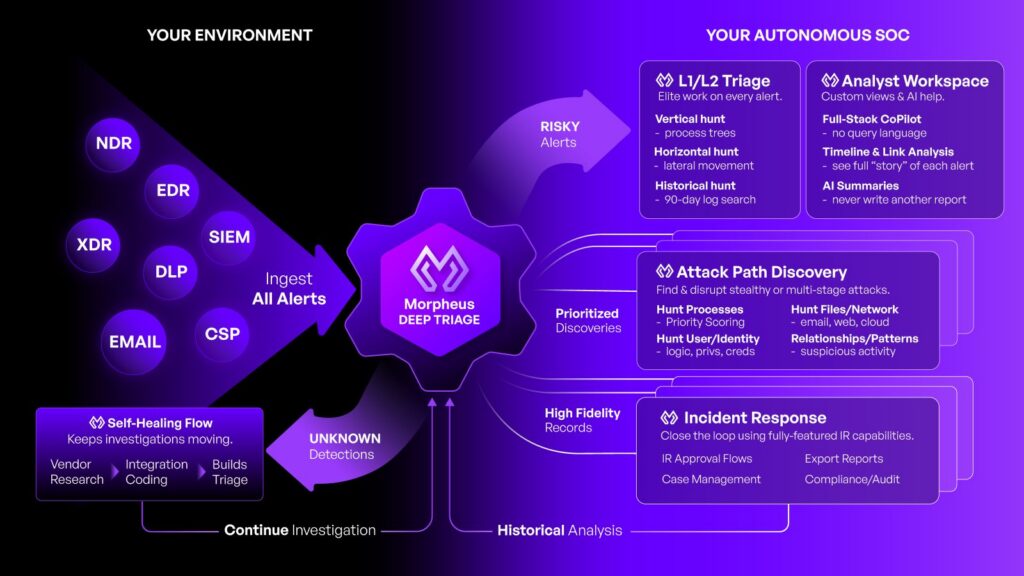

Three tiers of AI SOC automation

Most AI SOC vendors love talking about speed and scale. What they’re less eager to discuss: where the guardrails go. Beck broke it down into phases that mirror how mature SOCs are actually deploying this technology:

Phase One (Fully Automated): This tier targets the low-hanging fruit: those low-risk, high-volume alerts that are 99% noise. They cause the most burnout, and 95% of them can be triaged in under two minutes through automation.

At this level, you have a human-on-the-loop model. A supervisor reviews metrics and spot-checks decisions, but the AI handles the heavy lifting. No alert gets buried in a queue because an analyst was swamped.

Phase Two (Potentially Fully Automated): As you move into medium-risk alerts, context matters more. These alerts often involve multiple tools and require the AI to build timelines and attack paths.

Here’s where the one-click approval model works well. The AI does the investigation, builds the timeline, surfaces evidence, and says: “I found this, I verified this, and I recommend this action. Do you approve?”

The analyst reviews, clicks approve, and the AI executes, removing the grunt work so humans can focus on decisions.

Phase Three (Human in the Loop Required): This tier exists for a reason. Some actions carry business impact that no organization should hand to AI without oversight.

That means actions like disabling user accounts, revoking access, resetting credentials, and isolating systems: anything high-impact or irreversible.

The third tier exists to define where your risk tolerance actually sits. You need gated approvals, role-based permissions, and explicit human sign-off before anything gets isolated, disabled, or blocked.
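To make the tiering concrete, here is a minimal Python sketch of a routing rule, assuming each alert arrives with a risk label (“low”, “medium”, “high”) and a proposed response action. The `Tier` enum, the `assign_tier` function, and the action names are hypothetical illustrations, not part of Morpheus or any D3 API.

```python
from enum import Enum


class Tier(Enum):
    AUTOMATED = 1       # human on the loop: AI acts, a supervisor spot-checks
    ONE_CLICK = 2       # AI investigates and recommends; an analyst approves
    HUMAN_REQUIRED = 3  # gated approval with explicit human sign-off


# Hypothetical names for the high-impact, hard-to-reverse actions in tier three.
HIGH_IMPACT_ACTIONS = {
    "disable_account",
    "revoke_access",
    "reset_credentials",
    "isolate_host",
}


def assign_tier(risk: str, proposed_action: str) -> Tier:
    """Choose a handling tier from the alert's risk label and the AI's proposed action."""
    # The action is checked first: high-impact responses always need sign-off.
    if proposed_action in HIGH_IMPACT_ACTIONS:
        return Tier.HUMAN_REQUIRED
    if risk == "low":
        return Tier.AUTOMATED
    if risk == "medium":
        return Tier.ONE_CLICK
    # "high" risk, or any label we don't recognize, gets the most conservative tier.
    return Tier.HUMAN_REQUIRED


if __name__ == "__main__":
    print(assign_tier("low", "close_as_benign"))  # Tier.AUTOMATED
    print(assign_tier("medium", "block_sender"))  # Tier.ONE_CLICK
    print(assign_tier("low", "isolate_host"))     # Tier.HUMAN_REQUIRED
```

Checking the action before the risk label reflects the logic of tier three: a high-impact action needs sign-off even when the alert that prompted it looked routine, and an unrecognized risk label falls back to human review rather than to automation.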

Which AI SOC actions need human approval?

Not everything belongs in the “automate it” bucket. These five categories should stay behind an approval gate:

1. Disabling user accounts

Sounds straightforward until the AI disables the CEO’s account during a board presentation because it flagged unusual login behavior from a hotel in another time zone.

2. Revoking access

Access changes cascade. Revoking the wrong credentials at the wrong time can lock out entire teams or break production workflows.

3. Resetting credentials

Forced credential resets disrupt users and can trigger support ticket avalanches. AI should recommend, not execute.

4. Isolating systems

This is where things get business-critical. Isolating a POS system means revenue stops. Isolating a production server means operations halt. These decisions need human judgment about business context that AI doesn’t have.

5. Modifying firewall or routing policies

Broad routing changes can blackhole legitimate traffic. One misconfigured rule can take down connectivity for an entire segment. AI should surface recommendations, but humans should pull the trigger.
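An approval gate for these five categories could be sketched as follows. It assumes each AI-proposed action carries a name, a target, the AI’s rationale, and an optional approval record. The action names, role names, `ProposedAction`, and `execute` are all hypothetical, and the real call to an EDR, identity provider, or firewall is left as a placeholder.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical action names for the five gated categories.
GATED_ACTIONS = {
    "disable_account",
    "revoke_access",
    "reset_credentials",
    "isolate_host",
    "modify_firewall_policy",
}

# Hypothetical role-based permissions: which roles may approve each gated action.
APPROVER_ROLES = {
    "disable_account": {"soc_lead", "iam_admin"},
    "revoke_access": {"soc_lead", "iam_admin"},
    "reset_credentials": {"soc_lead", "iam_admin", "analyst"},
    "isolate_host": {"soc_lead", "it_ops_manager"},
    "modify_firewall_policy": {"network_admin"},
}


@dataclass
class Approval:
    approver: str
    role: str


@dataclass
class ProposedAction:
    name: str
    target: str
    rationale: str                      # the AI's explanation, shown to the approver
    approval: Optional[Approval] = None


class ApprovalRequired(Exception):
    """Raised when a gated action lacks a valid human sign-off."""


def execute(action: ProposedAction) -> str:
    """Run an AI-proposed action only if it is ungated or carries a valid sign-off."""
    if action.name in GATED_ACTIONS:
        if action.approval is None:
            raise ApprovalRequired(f"{action.name} on {action.target} needs human sign-off")
        if action.approval.role not in APPROVER_ROLES.get(action.name, set()):
            raise ApprovalRequired(
                f"role {action.approval.role!r} may not approve {action.name}"
            )
    # Placeholder for the real integration call (EDR, IdP, firewall API, ...).
    return f"executed {action.name} on {action.target}"


if __name__ == "__main__":
    # Hypothetical example: the AI wants to isolate a POS terminal.
    isolate = ProposedAction("isolate_host", "pos-terminal-07", "suspected C2 beaconing")
    try:
        execute(isolate)
    except ApprovalRequired as err:
        print("blocked:", err)
    isolate.approval = Approval(approver="jdoe", role="soc_lead")
    print(execute(isolate))
```

Enforcing the role check inside `execute`, rather than only in a review screen, means a gated action cannot run without sign-off from a permitted role, regardless of which path submitted it.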

Transparency: from black box to glass box

Beck framed D3’s approach as turning AI from a “black box” into a “glass box”—where users can see what the AI did, why it did it, and what guardrails prevented it from doing something else.

In Morpheus, D3’s AI SOC platform, that means opening up everything the AI generates for review:

“You can review it line by line or send it to external systems to confirm every decision.”

This matters for two reasons. First, analysts need to trust the system before they’ll rely on it. Second, when something goes wrong, you need an audit trail. “The AI did it” won’t hold up in a post-incident review or a compliance audit.

Pentapati framed it this way: “Without a chain of thought, you can’t trust the result. Which log line triggered the action? Which threat intel feed correlated? What decision tree did the system follow? If you don’t have answers to those questions, you’re just replacing alert fatigue with confusion.”

“If you’re replacing burnout with confusion, what are you really doing?”
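Pentapati’s questions map naturally onto an audit record. The sketch below assumes a hypothetical `AuditRecord` schema (not Morpheus’s actual data model) that stores the triggering log line, any threat intel matches, the ordered decision steps, and the approver, then serializes everything to JSON so it can be reviewed line by line or sent to an external system. The sample log line and feed entry are invented for illustration.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass
class DecisionStep:
    check: str   # what the AI evaluated at this step
    result: str  # what it concluded


@dataclass
class AuditRecord:
    # Hypothetical schema for illustration; not Morpheus's actual data model.
    alert_id: str
    action: str
    triggering_log_line: str                                          # which log line triggered it
    intel_matches: List[str] = field(default_factory=list)            # which feeds correlated
    decision_path: List[DecisionStep] = field(default_factory=list)   # steps the system followed
    approved_by: Optional[str] = None                                 # set when a human signs off
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def add_step(self, check: str, result: str) -> None:
        self.decision_path.append(DecisionStep(check, result))

    def to_json(self) -> str:
        # asdict() recurses into nested dataclasses, so each DecisionStep becomes a dict.
        return json.dumps(asdict(self), indent=2)


if __name__ == "__main__":
    record = AuditRecord(
        alert_id="ALERT-1042",
        action="disable_account",
        triggering_log_line="login user=jdoe src=203.0.113.7 result=success",
    )
    record.intel_matches.append("example-feed: 203.0.113.7 listed as anonymizing proxy")
    record.add_step("source IP reputation", "matched one intel feed")
    record.add_step("login location vs. user baseline", "first login from this country")
    record.add_step("account-takeover policy", "recommend disable; tier three, await approval")
    print(record.to_json())
```

Because every field is plain data, the same record can back an analyst’s review screen, a post-incident report, or a compliance export without re-deriving why the AI acted.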

The transparency layer gives you visibility into the reasoning behind every automated decision. It lets you tune the AI’s decision-making to match your SOPs. In fact, D3 allows customers to update their organization’s Morpheus AI model, so that staff knowledge can be incorporated over time.

That last point matters more than people realize. Like the rest of the workforce, the analyst role is evolving, and Morpheus accelerates the shift into higher-impact work. Auditing AI actions at scale and refining the Morpheus LLM in turn strengthens its attack-path-discovery approach.

What to look for in an AI SOC platform

If you’re evaluating vendors in this space, a few questions cut through the noise. Here’s what the D3 team suggested:

Decide what problem you’re actually solving. Faster triage? Fewer alerts? Smarter decision-making? Different models have different reasoning strengths.

Understand the automation depth. Some vendors stop at tier-one automation. D3 covers tiers one, two, and three and assists across the entire lifecycle. Ask vendors where their ceiling is.

Ask about the agent architecture. Some platforms deploy multiple agents in your environment that you’ll need to manage yourself. Understand the operational overhead before you sign.

Check if it’s end-to-end. Can you triage, enrich, investigate, respond, and document in one platform? Do you still pivot between systems? If you buy an AI SOC tool, do you still need SOAR? If yes, why?

Understand decision-making vs. suggestions. Does the AI make decisions or recommendations? If it makes decisions, can you see why? Can you audit them? Can you refine the logic so your work today makes tomorrow’s work better?

Don’t automate what you can’t explain

Effective SOC operations are the goal. Full automation for its own sake will get you burned. The webinar audience’s poll results showed that incorrect actions due to misclassification are a top concern. Addressing that means knowing where to put the guardrails and building transparency into every layer. It also means adopting AI in phases that build trust rather than anxiety.

Morpheus AI is about 70-80% framework and guardrails, with only 20-30% being the LLM itself. The framework guides the AI, builds the attack path, and prevents drift. You’re not throwing prompts at a language model and hoping for the best; you’re using AI with explicit human-in-the-loop checkpoints for high-impact actions.

Whether you’re evaluating Morpheus or building your own AI SOC strategy, the underlying principle holds: start with noise, build toward trust, never automate what you can’t explain, and always leave yourself a way to refine the logic.

D3 Security will be at RSA Conference. If you’re attending, book a meeting for a personalized demo and some solid swag. Have questions about implementing AI in your SOC? Reach out to the D3 team.