Every SOC leader is hearing the same promise right now: “Drop an AI agent on every alert and watch triage take care of itself.”

It sounds perfect. Until you see the cloud bill.

A recent field experiment by a security engineer using Google SecOps put the idea into production-like conditions. He connected a custom agent workflow to SecOps alerting, then let it run across incoming alerts. Triage quality was excellent. Playbook-building effort dropped sharply.

Then the billing anomaly alerts started. His takeaway was not that the approach failed. It worked. The takeaway was that the cost profile makes it very experimental right now, and he ultimately chose to turn it off.

Treat that as a design signal. AI in the SOC needs limits, or it turns into open-ended spend.

Here is why free-running agents get expensive at scale, and how D3’s Morpheus keeps autonomy bounded.

The Experiment That Blew Up the Bill

The engineer’s journey followed three fast phases that every SOC leader should study.

Phase 1: Safe and predictable.

He started by embedding LLM calls inside classic SOAR playbooks. The AI handled summaries and query hints, but the playbook remained in charge.

Phase 2: The “Agent-First” pivot.

Next, he pushed the investigative work into the agent. He wrapped it as a SOAR action, added it to a playbook, and had the agent execute the triage steps autonomously. The experience felt smoother, and the output looked like a human investigation log. So far, so good.

Phase 3: The cost curve kicks in.

Within a few days, Google Cloud billing anomaly alerts fired. Vertex AI usage spiked hard after the shift to an agent doing the work.

His explanation was simple and brutally important. When an agent performs its own work, every action it takes to reach an answer is metered. You can refine prompts to narrow scope and reduce spend, but at some point that starts to resemble the structured workflow you were trying to avoid in the first place.

The value story collapses when cost becomes unpredictable.

Key AI SOC Takeaways

Unconstrained agents are cost amplifiers.

Give an agent wide latitude and it will take more steps to be thorough. That can be great in a lab or a low-volume queue. At thousands of alerts per day, it becomes a cost multiplier.
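To make the multiplier concrete, here is a back-of-the-envelope sketch. Every number in it (alert volume, model calls per alert, tokens per call, price per 1,000 tokens) is a made-up placeholder, not a figure from the experiment or from any vendor price list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CostAssumptions:
    """Illustrative inputs only; replace with your own measured values."""
    alerts_per_day: int
    model_calls_per_alert: int
    tokens_per_call: int
    usd_per_1k_tokens: float


def daily_cost(a: CostAssumptions) -> float:
    """Estimated daily model spend in USD under the given assumptions."""
    tokens = a.alerts_per_day * a.model_calls_per_alert * a.tokens_per_call
    return tokens / 1000 * a.usd_per_1k_tokens


# Hypothetical scenario: same queue, same price, different number of metered calls.
scripted = CostAssumptions(alerts_per_day=5000, model_calls_per_alert=1,
                           tokens_per_call=2000, usd_per_1k_tokens=0.01)
agentic = CostAssumptions(alerts_per_day=5000, model_calls_per_alert=12,
                          tokens_per_call=2000, usd_per_1k_tokens=0.01)

print(f"scripted: ${daily_cost(scripted):,.2f} per day")
print(f"agentic:  ${daily_cost(agentic):,.2f} per day")
```

The point is the shape, not the dollar amounts: spend scales linearly with the number of metered calls per alert, and that is the one variable a free-running agent decides for itself.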

“Agent on every alert” is not a strategy.

SOC work still needs structure. You need guardrails, stopping conditions, tool scopes, and a clear intent per alert type. Without scope control, agents keep pulling context to be thorough, and on high-volume noise that turns into a pile of extra calls.
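One way to picture "a clear intent per alert type" is a small policy table that is checked before every tool call. The sketch below is a generic illustration; the alert types, tool names, and limits are all hypothetical and do not describe any product's configuration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class TriagePolicy:
    intent: str                 # the single question the agent must answer
    allowed_tools: frozenset    # tool scope for this alert type
    max_tool_calls: int         # stopping condition


# Hypothetical policies; names and numbers are placeholders.
POLICIES = {
    "phishing": TriagePolicy(
        intent="Decide whether the email is malicious",
        allowed_tools=frozenset({"email_headers", "url_reputation"}),
        max_tool_calls=4,
    ),
    "impossible_travel": TriagePolicy(
        intent="Decide whether the login is account takeover",
        allowed_tools=frozenset({"identity_logs", "geoip"}),
        max_tool_calls=6,
    ),
}

# Unknown alert types get no tools and no budget, so they go to a human.
DEFAULT_POLICY = TriagePolicy("Summarize and escalate", frozenset(), 0)


def authorize(alert_type: str, tool: str, calls_so_far: int) -> tuple[bool, str]:
    """Return (allowed, reason) for a tool call the agent wants to make."""
    policy = POLICIES.get(alert_type, DEFAULT_POLICY)
    if tool not in policy.allowed_tools:
        return False, f"tool '{tool}' is out of scope for '{alert_type}'"
    if calls_so_far >= policy.max_tool_calls:
        return False, "tool-call budget exhausted"
    return True, "ok"
```

The agent still chooses what to look at, but only inside the scope and budget that the alert type justifies.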

Cost volatility becomes a buyer risk.

Even if outcomes are strong, leaders will ask who carries the risk when usage and costs drift over time. If the strategy is “let the agent figure it out,” that answer is hard to defend.

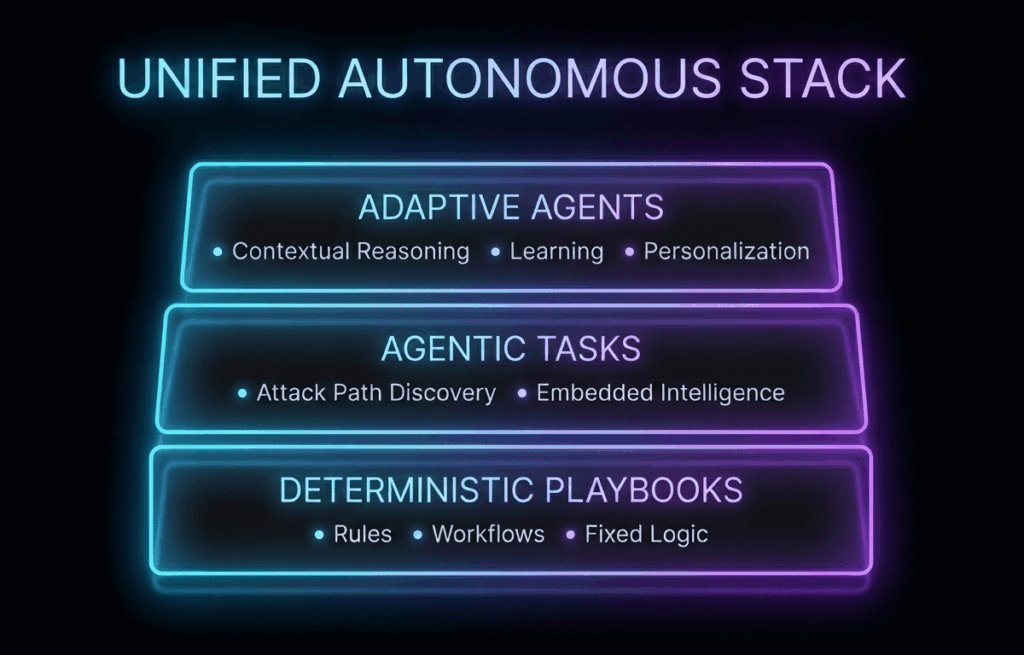

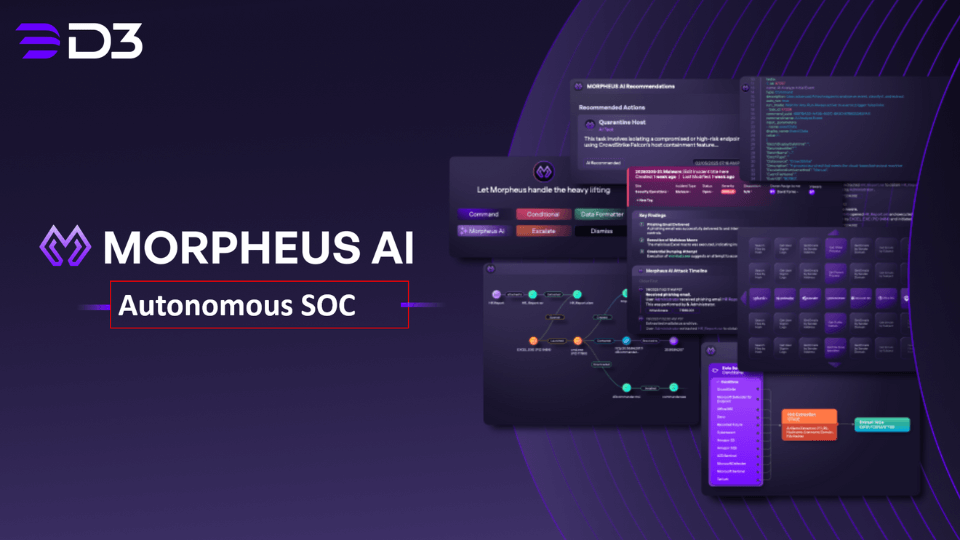

The Morpheus Approach: Agent Brain, Deterministic Shell

Agents are embedded inside a structured workflow built on established automation and case management. Morpheus operates on a three-layer stack designed for cost-aware autonomy:

- Deterministic playbooks at the base.

- Intelligent, agentic tasks inside those playbooks.

- Adaptive agents operating on top of full incident context.

The Intelligent Task is the Key

Inside a standard Morpheus playbook, one special task can think for itself. It can read live results, call tools with new questions, and branch if data looks new. But the surrounding shell still controls start, finish, tool scopes, approvals, and maximum effort.

You keep the reliability of a scripted playbook. You gain a slot that adapts. Instead of handing over an alert to a free-running agent that burns your budget, you can cap investigation depth and keep runtime under control.
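As a rough illustration of the "adaptive slot inside a fixed shell" idea, the sketch below caps how many steps an adaptive task may take and escalates when the cap is hit. It is not Morpheus code: `run_intelligent_task`, the `decide` callback standing in for the model, and the tool names are all assumptions made for the example.

```python
from typing import Callable, Optional

# Stand-in for the model: given findings so far, return the next tool
# to call, or None when it has enough to conclude.
Decide = Callable[[list], Optional[str]]


def run_intelligent_task(decide: Decide,
                         tools: dict,
                         max_steps: int) -> dict:
    """Run an adaptive task, but let the shell own the stopping rules."""
    findings = []
    for step in range(max_steps):
        choice = decide(findings)
        if choice is None:
            # The agent is satisfied before the cap.
            return {"status": "completed", "steps": step, "findings": findings}
        if choice not in tools:
            # The shell, not the agent, decides which tools exist.
            return {"status": "escalated", "reason": f"unscoped tool: {choice}",
                    "steps": step, "findings": findings}
        findings.append(tools[choice]())
    # Cap reached: stop spending and hand off with what was gathered.
    return {"status": "escalated", "reason": "step cap reached",
            "steps": max_steps, "findings": findings}


# Hypothetical tools returning canned strings.
tools = {
    "whois": lambda: "whois: domain registered 2 days ago",
    "sandbox": lambda: "sandbox: no detonation observed",
}


def never_satisfied(findings: list) -> Optional[str]:
    """A deliberately greedy agent that always asks for more context."""
    return "whois"


result = run_intelligent_task(never_satisfied, tools, max_steps=3)
print(result["status"], "-", result["reason"])
```

Even with an agent that never decides it is done, the run ends after three calls, and the playbook continues with a defined outcome instead of an open loop.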

Cost-Aware Autonomy, Built In

The SecOps experiment surfaces two practical requirements for real SOC deployment. First, keep agents from doing deep work on cheap alerts. Second, shield buyers from surprise bills when scale arrives. Morpheus is built to address both.

Flat Pricing, Bounded Work

We aim to keep per-alert work within a sane envelope. Shared correlation means you do not pull the same context for every sibling alert. Intelligent tasks run inside bounded workflows, not open loops.
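The shared-correlation idea can be sketched as a context cache keyed by entity, so sibling alerts on the same host reuse one lookup. This is a minimal, generic illustration; the `SharedContext` class and the `fetch_host_context` function are hypothetical, and a real system would also need expiry and invalidation.

```python
from typing import Callable


class SharedContext:
    """Fetch enrichment once per entity and reuse it across sibling alerts."""

    def __init__(self, fetch: Callable[[str], dict]):
        self._fetch = fetch
        self._cache = {}
        self.fetch_count = 0  # number of real (billable) lookups performed

    def get(self, entity: str) -> dict:
        if entity not in self._cache:
            self._cache[entity] = self._fetch(entity)
            self.fetch_count += 1
        return self._cache[entity]


def fetch_host_context(host: str) -> dict:
    """Placeholder for an expensive enrichment call."""
    return {"host": host, "owner": "unknown", "recent_logins": []}


context = SharedContext(fetch_host_context)

# Three sibling alerts that all point at the same host.
sibling_alerts = [
    {"id": "a1", "host": "ws-042"},
    {"id": "a2", "host": "ws-042"},
    {"id": "a3", "host": "ws-042"},
]
for alert in sibling_alerts:
    context.get(alert["host"])

print("alerts:", len(sibling_alerts), "lookups:", context.fetch_count)
```

Three alerts trigger one lookup here, which is the saving the paragraph above describes.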

Smart Resource Allocation

We push heavy model effort where risk justifies it. Simple alerts stay on deterministic rails with minimal AI hops. High-value incidents trigger deeper hunting across broader context. Autonomy stays under control because scope is intentional.
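A simple way to express this kind of allocation is a routing function that maps alert risk to a handling tier, with a model-call budget attached to each tier. The field names, tier names, and budgets below are assumptions for illustration, not a description of how Morpheus scores or routes alerts.

```python
# Hypothetical per-tier budgets for model calls; tune to your own environment.
MODEL_CALL_BUDGET = {
    "deterministic_playbook": 0,
    "bounded_intelligent_task": 5,
    "deep_investigation": 25,
}


def route(alert: dict) -> str:
    """Pick a handling tier from alert severity and asset criticality."""
    severity = alert.get("severity", "low")
    asset_critical = alert.get("asset_critical", False)
    if severity in ("high", "critical") or asset_critical:
        return "deep_investigation"
    if severity == "medium":
        return "bounded_intelligent_task"
    return "deterministic_playbook"


examples = [
    {"id": "a1", "severity": "low"},
    {"id": "a2", "severity": "medium"},
    {"id": "a3", "severity": "low", "asset_critical": True},
]
for alert in examples:
    tier = route(alert)
    print(alert["id"], tier, "budget:", MODEL_CALL_BUDGET[tier])
```

Because the tier is chosen before any model call is made, the worst-case spend per alert is known up front.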

Guardrails, Because Liability Matters

In the experiment above, the engineer used an Agent Development Kit (ADK) Web-based setup that is intended for testing and does not ship with strong security defaults. That is great for exploration. It is not where you want to live for production SOC workflows.

Morpheus takes a production-grade route:

- GitOps: Playbooks live as YAML, sync to GitHub, and pass unit tests before shipping.

- Guardrails: Agents work under scoped tools and explicit approvals for risky actions.

- Audit Trails: The system records exactly how the agent thought, what it called, and what changed.

Instead of a black box agent, you get a platform that treats security operations like production code.

Agentic triage can work. The difference between a win and a runaway bill is structure, limits, and visibility. If you want to see what that looks like in practice, book a Morpheus demo.