There’s a weird tension in the security community right now (OK, we admit it. Weird tension in security is a feature, not a bug).

On one side, SOC teams want an AI SOC they can actively govern: a system that exposes its investigation logic and can be tuned to reflect the tools, data quality, and risk tolerances of their environment.

On the other side, people still have scar tissue from trying to build “the perfect SOAR” and ending up with a fragile maze of playbooks that only two people understand.

So the anxiety sounds like this:

“If I demand real control over my AI SOC, am I signing up for another layer of complexity and maintenance hell?”

In our recent white paper on blending deterministic workflows with AI, we argue that this is the key design question for the next generation of SOCs, especially in large enterprises and MSSPs. Security automation practitioner Filip Stojkovski hits the same nerve in his Cybersecurity Automation blog post, “AI SOCs You Can Actually Control and Customize.”

Let’s unpack where that anxiety comes from, and how a hybrid “autonomy with guardrails” model can give you more control and less complexity, not more of both.

Two Ways to Lose Sleep: Playbook Sprawl vs Black-Box AI

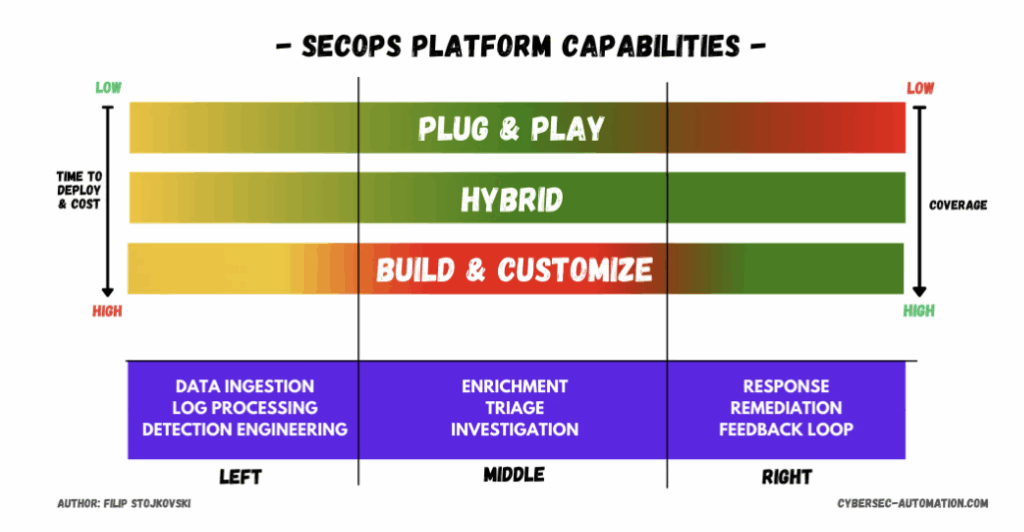

Filip describes two main implementation styles he sees in the wild: build-and-customize platforms and plug-and-play AI SOCs. They map eerily well to the two extremes we see when talking to SOC leaders.

1. The Build-and-Customize Trap

Build-and-customize platforms feel like classic strategy games. You start with an empty map and design everything:

• Every integration

• Every enrichment step

• Every deterministic branch in the workflow

You get maximum control, and you inherit all of the complexity.

Filip describes a real SOC that had over 200 automations, each tied to a specific detection. Any time a vendor changed an API or log schema, playbooks broke. They ended up needing more engineers than analysts just to keep workflows alive.

That lines up with what we called “rule and playbook explosion” in the white paper: deterministic workflows are fantastic at predictable tasks, but at scale they become brittle and expensive to maintain. A big chunk of expert time goes into keeping the automation alive instead of actually responding to incidents.

2. The Plug-and-Play Black Box

At the other extreme are plug-and-play AI SOCs:

• You hook up your SIEM or log sources.

• The platform starts clustering and triaging.

• It might even run some containment.

Fast time-to-value, minimal configuration… but no clear view of the logic. Filip calls this the “strategy RPG” model: you’re playing in someone else’s pre-built world with someone else’s rules.

That leads to a different kind of anxiety:

• What is it suppressing that I would care about?

• How do I know it’s not auto-closing something important?

• How do I explain these decisions to audit, customers, or my own leadership?

You traded implementation complexity for governance complexity. That’s not a great deal either.

Why Both Extremes Create AI SOC Anxiety

From a distance, build-and-customize vs plug-and-play looks like a technical choice. Up close, it’s a psychological one.

Build-and-customize gives you the illusion of safety through control, until you realize your workflow library is held together by a few overworked engineers and a Confluence space.

Plug-and-play gives you the illusion of safety through vendor magic, until you need to challenge or adjust a decision and discover you can’t.

Our white paper frames this as a deeper architectural problem: we’ve treated automation either as purely deterministic code (SOAR-style playbooks) or as purely AI-driven systems (black-box AI SOCs). Neither alone can handle the messy middle of the incident lifecycle (enrichment, triage, investigation) without either exploding in size or becoming opaque.

The result is entirely rational anxiety: “If I don’t control it, I can’t trust it. If I do control it, I’ll drown in it.” So what’s the out?

A Third Option: Autonomy with Guardrails

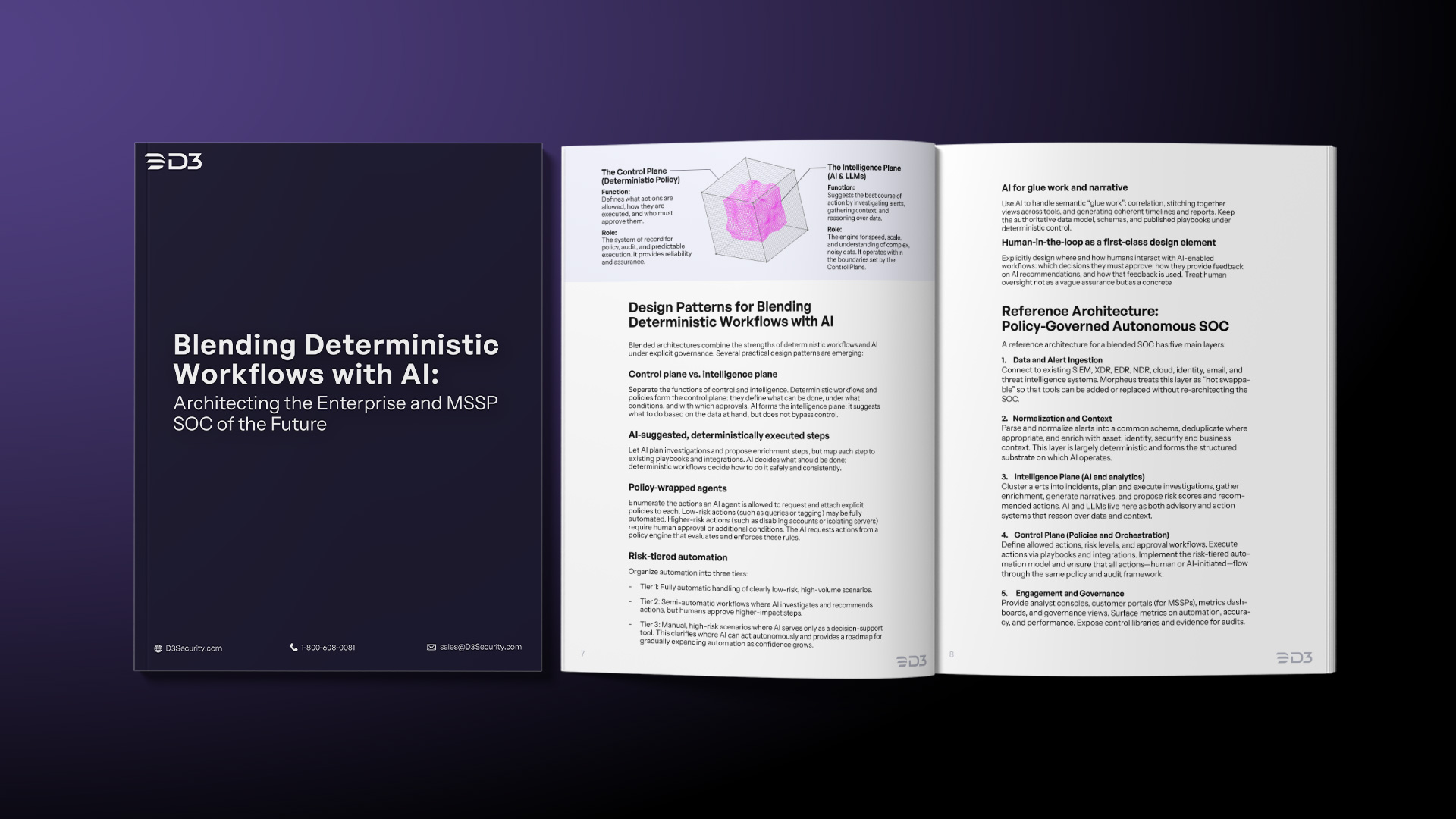

Both our white paper and Filip’s article converge on a hybrid: AI autonomy inside deterministic guardrails.

Filip explains it with a relatable PC gamer analogy:

• City-builder phase: You decide the rules of the world. Set up integrations, define critical assets, and express guardrails like “any action on domain controllers requires human approval.” This is your deterministic control plane.

• RPG phase: Autonomous AI agents operate inside that world. They investigate alerts, pull enrichment, build hypotheses, and suggest remediation, but stay on the “roads” you laid down. That’s your AI intelligence plane, plus human-in-the-loop.

In our white paper, we use slightly different language but the same idea: deterministic workflows and policies as the control plane, AI/LLM agents as the intelligence plane, and humans providing governance and escalation.

The key insight: you don’t try to script every investigation step as brittle playbooks, nor do you hand the keys to an opaque model. You express what must always be true (policies, approvals, no-go zones), let AI decide what to do inside those constraints, and make all of that observable and editable so your team can inspect and improve the logic.
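To make the “what must always be true” layer a little more concrete, here is a minimal Python sketch of a guardrail check that sits between an AI agent’s proposed action and its execution. Everything in it is an assumption for illustration: the tag names, the action names, and the `evaluate` function are hypothetical and are not taken from Morpheus or any other product’s API.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    REQUIRE_APPROVAL = "require_approval"
    DENY = "deny"


@dataclass(frozen=True)
class ProposedAction:
    name: str                # hypothetical action name, e.g. "isolate_host"
    target: str              # asset the AI agent wants to act on
    target_tags: frozenset   # asset tags defined in the "city-builder" phase


# Hypothetical guardrails, written once by the SOC team.
NO_GO_TAGS = frozenset({"safety_system"})          # never touched by automation
APPROVAL_TAGS = frozenset({"domain_controller"})   # human approval required
READ_ONLY_ACTIONS = frozenset({"lookup_ip_reputation", "fetch_process_tree"})


def evaluate(action: ProposedAction) -> Decision:
    """Decide whether an AI-proposed action may run, must wait, or is blocked."""
    # Read-only enrichment never changes state, so this sketch always allows it.
    if action.name in READ_ONLY_ACTIONS:
        return Decision.ALLOW
    # No-go zones win over everything else.
    if action.target_tags & NO_GO_TAGS:
        return Decision.DENY
    # "Any action on domain controllers requires human approval."
    if action.target_tags & APPROVAL_TAGS:
        return Decision.REQUIRE_APPROVAL
    return Decision.ALLOW
```

In this sketch, the AI is free to propose whatever investigation or response it likes, but `evaluate` has the last word. The guardrails are a handful of sets, not a playbook per detection.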

How This Reduces Both Control and Complexity Debt

“More control means more complexity” is true if your control is expressed at the wrong level of abstraction.

If your control model is “write a new playbook for every detection,” you’re effectively handwriting assembly code for a changing environment. Of course complexity explodes.

But if your control model is policies and automation tiers, where policies define what must always be true and tiers define how far automation is allowed to go, then you can let the AI generate and adapt the actual investigations on top of that.

You’ve moved control up from thousands of tiny playbook decisions to a manageable layer of policies and tiers. That’s the core of the blended architecture we outlined in the white paper: deterministic systems provide shape and safety, AI handles the mess and scale.
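As a rough illustration of automation tiers, the Python sketch below takes an AI-generated plan and splits it into steps that may run automatically and steps that are held for a human, based on a ceiling per asset class. The tier names, step names, and asset classes are hypothetical placeholders chosen for the example, not a scheme defined in the white paper.

```python
from enum import IntEnum


class Tier(IntEnum):
    ENRICH = 1      # read-only lookups
    CONTAIN = 2     # reversible containment
    REMEDIATE = 3   # changes that are hard to undo


# Hypothetical: the tier each kind of step needs before it can auto-run.
STEP_TIER = {
    "whois_lookup": Tier.ENRICH,
    "pull_edr_timeline": Tier.ENRICH,
    "isolate_host": Tier.CONTAIN,
    "reimage_host": Tier.REMEDIATE,
}

# Hypothetical: how far automation is allowed to go per asset class.
ASSET_MAX_TIER = {
    "workstation": Tier.CONTAIN,
    "server": Tier.ENRICH,
}


def split_plan(plan, asset_class):
    """Return (auto_run, held_for_human) for an AI-generated list of steps."""
    # Unknown asset classes get the most conservative ceiling.
    ceiling = ASSET_MAX_TIER.get(asset_class, Tier.ENRICH)
    auto_run, held = [], []
    for step in plan:
        # Unknown steps are treated as the riskiest tier.
        required = STEP_TIER.get(step, Tier.REMEDIATE)
        (auto_run if required <= ceiling else held).append(step)
    return auto_run, held
```

For example, `split_plan(["whois_lookup", "isolate_host"], "server")` would auto-run the lookup and hold the isolation for a human. The design choice worth noticing is that the tables stay small even as the number of detections grows, because they describe asset classes and step kinds, not individual alerts.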

A Concrete Example: How Morpheus Approaches the Problem

Security vendors talk about this blending in different ways, but Filip’s blog calls out D3 Security’s Morpheus AI SOC as a concrete example of “autonomy with guardrails.” To be clear, that edition of his blog is sponsored by Morpheus, but the analysis and framing are his own, as an independent practitioner.

Morpheus is designed as an overlay AI SOC:

• It ingests alerts from your existing stack (SIEM, XDR, EDR, cloud security, email gateways, etc.) via a large integration library.

• It uses a D3-built investigative framework and cybersecurity-focused LLM to autonomously investigate and triage alerts, delivering 100% alert coverage and triaging 95% of alerts in under two minutes in typical deployments.

• Crucially, when you “drop an alert” into Morpheus, it builds a full investigation runbook on the fly and shows it to you. You can see each step, modify the workflow, and audit the logic.

That maps almost one-to-one with the hybrid model: the city-builder phase is where you define integrations, critical assets, and guardrails, and the RPG phase is the AI engine generating investigations, pulling context across tools, building timelines and risk scoring, and recommending actions, all visible in a SOC-native workspace.

Do you have to use Morpheus to get a hybrid AI SOC? No. But it’s a useful reference point when you’re evaluating the landscape: you can ask other vendors, “Can I see the steps your AI takes, edit them, and govern them with my own policies?” If the answer is “no, but trust us,” the unease you feel is your anxiety talking, and it’s doing you a favor.

Questions to Ask Yourself (and Vendors)

If you’re feeling AI SOC anxiety right now, a few questions help distinguish real control from cosmetic control:

1. Where is your control expressed? At the level of hundreds of playbooks, or at the level of policies and automation tiers?

2. Can you see and edit the AI’s plan? When an alert comes in, can you view the investigation steps, or do you just see a verdict?

3. What’s the blast radius of a mistake? Is every action mediated by policies and approvals, or can an LLM on a bad day lock out admins?

4. How much builder debt are you accumulating? Are you buying a platform that quietly turns you into the builder, or one that generates workflows on top of your guardrails?

5. Can you explain it to your regulator or customer? If an incident goes wrong, can you reconstruct who/what made which decision, and why?
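On that last question, it can help to picture what “reconstructing who or what made which decision, and why” might look like as data. The Python sketch below is one possible shape for an append-only decision log. The field names and the `record` and `reconstruct` helpers are assumptions for illustration only, not a description of how any particular platform stores its audit trail.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class DecisionRecord:
    alert_id: str
    step: str               # what was proposed or done
    actor: str              # "ai_agent" or a human username
    outcome: str            # e.g. "executed", "held_for_approval", "denied"
    rationale: str          # why the actor chose this step
    policy: Optional[str]   # guardrail that applied, if any
    timestamp: str          # UTC, ISO 8601


def record(log, **fields):
    """Append one decision to the log as a JSON line and return the record."""
    entry = DecisionRecord(
        timestamp=datetime.now(timezone.utc).isoformat(), **fields
    )
    log.append(json.dumps(asdict(entry), sort_keys=True))
    return entry


def reconstruct(log, alert_id):
    """Return every recorded decision for one alert, in the order it was made."""
    return [e for e in map(json.loads, log) if e["alert_id"] == alert_id]
```

A plain list stands in for durable storage here. In practice the log would need to be tamper-evident and retained according to your own audit requirements.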

Closing the Loop: Control Without the Panic

AI in the SOC is about to become table stakes. The key question is: can we get the benefits of AI without recreating SOAR complexity or accepting a black box we can’t govern?

The emerging answer is yes, but only if you treat deterministic policies, approvals, and workflows as your control plane; use AI for what it’s good at (messy investigations, correlation, summarization, and triage); keep humans in the loop for high-impact decisions and continuous tuning; and insist on tools that are glass boxes, not black boxes.

If you are curious how that looks architecturally, across enterprise and MSSP environments, our white paper on blending deterministic workflows with AI goes into the details: reference architecture, risk tiers, governance patterns, and how platforms like Morpheus slot in as practical examples.

More control doesn’t have to mean more anxiety. With the right architecture, it can just mean your SOC finally works at the scale you’ve been quietly asking of it for years.